The Role of Power-Saving Features of LTE IoT and Design Choices in Maximizing Device Battery Life

Executive Summary

The needs of IoT are diverse. They range from very high speeds needed for applications such as video to extremely low speeds needed for sensors. They range from low latency for voice communications to large capacity for dense deployments. The biggest common requirement for almost all these device classes is lower power consumption and longer battery life.

LTE IoT, the low-power wide-area network (LPWAN) branch of LTE, simplifies device architecture and adds features like Power Save Mode (PSM) and extended Discontinuous Reception (eDRX) to lower power consumption. The simplified architecture was designed specifically for devices and applications that don’t generate a lot of data or need high data speeds. The power-saving features allow engineers to design for maximum battery life by tuning sleep cycles to time of day, business hours, sleep hours, season, etc. Smart use of these features, coupled with sound design practices, can theoretically place battery life for typical LPWAN use cases in the 10-year range, depending on traffic patterns.

Along with the precise application of the standards-based power-saving features, it is important that IoT original equipment manufacturers (OEMs) and service providers optimally design and dimension their device application requirements. This includes fine-tuning peak data speeds, data traffic, sleep cycles, patterns and battery capacity. Typically, module vendors make their modules very flexible, supporting the full range of possible specifications. So, it is incumbent upon the device vendors to make sure they properly specify their devices based on the use case.

This paper explains how LTE technologies scale up and down to meet diverse IoT needs and discusses the optimizations adopted into the LTE IoT LPWAN technologies (LTE-M and NB-IoT). It offers guidance on how to choose the best LTE technology for a target use case and how to design and provision IoT devices to maximize their battery life.

Diverse Needs of IoT Applications

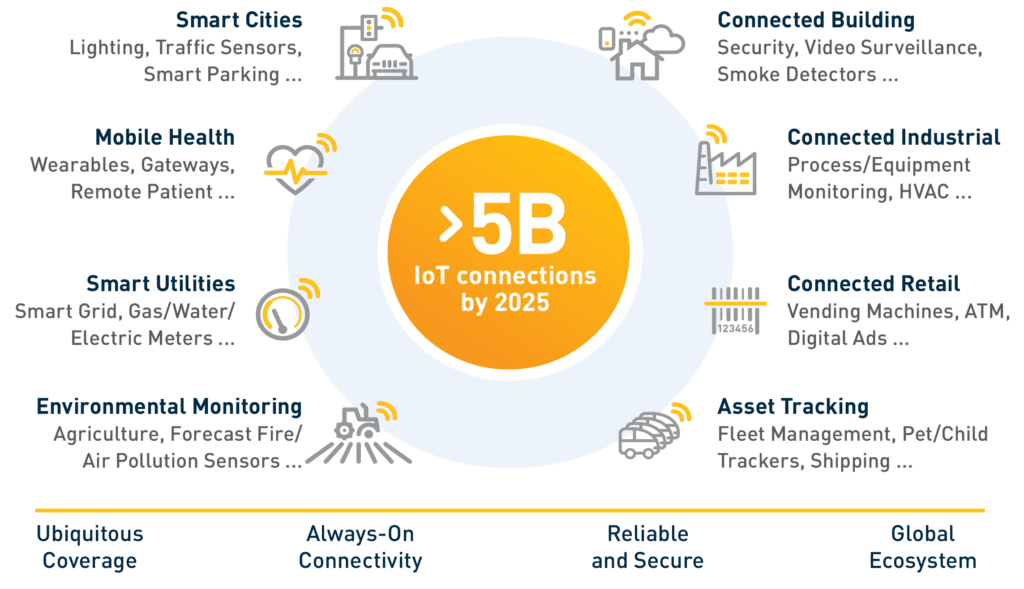

IoT, as a term, can be applied to almost anything that will be connected to the internet and, sometimes, surprisingly, things not connected to the internet. Cellular IoT specifically addresses a wide range of applications and services, including smart cities and industries, mobile health, etc., as seen in Figure 1.

This broad mix of applications has a diverse set of needs. Some applications, such as video surveillance or HD video streaming for industrial use, need very high speeds and huge amounts of bandwidth. Industrial or enterprise gateways might need a huge amount of data capacity. Simple agricultural sensors deployed in rural areas need expansive coverage, but they only consume a few bytes of data per event transmitted. Many such devices may not need seamless mobility. Some time-sensitive applications might need very low latency while devices deployed in logistics hubs and warehouses might need extreme connection density to efficiently support thousands of such devices in a very small space. All of this means that it is impossible to have a one-size-fits-all approach to IoT, be it networks or devices.

For cellular IoT devices specifically, some common needs span a full range of application possibilities. These include ubiquitous coverage so that users can deploy IoT services wherever and whenever they need and always-on connectivity so IoT devices are always available and connected. The need for reliability and security of the network, devices and services is imperative.

An important need for battery-powered IoT devices, no matter what the application or service, is low power consumption. Power economy results in either longer battery life or a reduction in the size and capacity of the battery pack needed, which in turn improves the cost and flexibility of the device. Responding to this market need, 3GPP, the cellular standards body, developed several features and optimizations that let network, device and application vendors make the right trade-offs to maximize the device’s battery life.

Maximizing battery life relies on two main factors. The first is the selection of the LTE technology for the use case to provide devices with optimum performance. The second is the specification of device parameters typically associated with the bulk of power consumption, including peak speeds, the frequency of communication needed (i.e., sleep cycles), and the type and size of the battery pack the device can accommodate.

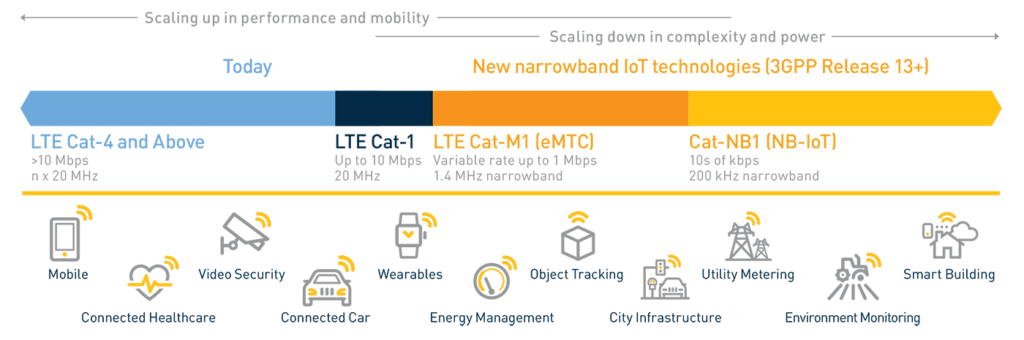

LTE Is Designed to Scale Across Diverse Needs of IoT

LTE was initially designed primarily for smartphones, for which higher speeds and seamless mobility were a necessity. It was assumed the device would be charged every day, even multiple times a day. However, when cellular moved into LPWAN, the focus had to include bursty and low-throughput communications, ubiquitous coverage, latency tolerance, lower mobility and longer battery life, especially for devices that can’t be charged every day. 3GPP has been evolving the standards to scale across many of these dimensions, as shown in Figure 2.

On one side, LTE starts from Category (Cat) 1, supporting 10 Mbps peak speeds, and scales up to the gigabit speeds of Cat 18 and the multi-gigabit speeds of 5G, all with full mobility. On the other side are the tens of kbps of Cat NB1 and NB2 (aka NB-IoT) and the hundreds of kbps of Cat M1 (LTE-M), with either full or limited mobility. The higher you go on this scale, the higher the complexity and cost of the devices. The lower you go, the lower the complexity and cost, the longer the battery life and the more expansive the coverage. Cat M1 and Cat NB1 offer much better in-building penetration than higher-category devices, along with up to 10 years of battery life.

With a wide range of technologies, LTE offers a suitable option for nearly every IoT use case, so designers of IoT devices and systems can select the technology that best fits their needs. For example, devices such as broadband gateways will need the highest categories available. Devices like utility meters or sensors need lower categories that provide just enough speed. If these lower-speed devices also need good mobility and support for voice, then LTE-M might be the best solution, and so forth.

IoT module vendors offer a wide range of modules to support all these speeds and technologies. It is incumbent upon the IoT device OEMs and system integrators to select the right module and, within the module, the right mix of features to optimize the performance of the device.

The Power-Saving Enhancements of LTE IoT

Moving from a smartphone-focused design mindset to one that addresses the needs of IoT, 3GPP worked on multiple dimensions. Its objective was to strip out the complexity involved in modern cellular smartphones. The simplification itself reduced power consumption to a large extent, and 3GPP also introduced many enhancements specific to IoT devices.

Simplifying IoT Device Architecture

The fundamental step in simplifying the architecture was reducing the peak speed devices must support. This meant dropping features such as carrier aggregation, higher-order modulation, MIMO and others. It also meant using narrower bandwidths: 1.4 MHz for LTE-M and 200 kHz for NB-IoT. LTE IoT also eliminates antenna diversity. There is a single antenna for transmitting and receiving, and half-duplex operation is supported, so one radio path serves both directions. The devices rely on lower-order modulation, such as QPSK, which is not suitable for higher speeds but is adequate for the slower speeds of IoT. The simpler feature set also lowers the memory needed in the devices, which saves more power.

There are also many optimizations in signaling and channel measurements. Many IoT devices don’t have to be in a constant monitoring state, which means many of the signaling steps involved in typical data calls can be eliminated. Similarly, devices may not need seamless mobility or rate adaptation, which means the constant flurry of measurements the device sends to the network to maintain the best possible link around the clock can be significantly curtailed as well. All of this yields a substantial reduction in overall power consumption and thereby increases battery life.

Power Save Mode (PSM)

The biggest contributor to multiyear battery life is extending the sleep cycles of IoT devices. To see why, it helps to understand how paging works for smartphones. A smartphone must wake up constantly to monitor paging messages and check whether the network has any data for it. The interval between these checks is at most a couple of seconds. Keeping the receive chain energized this often consumes a lot of power and drains the battery. If you have ever wondered why your smartphone loses battery even when you don’t use it at all, this is the reason.

Now contrast that with devices such as water meters. These meters wake up only once or twice a day at a fixed time, send their data in the form of a meter reading and go back to sleep. On some occasions, such as abnormal water consumption that might indicate a leak, they may also send a warning signal to the utility network. PSM is for applications that have device-originated data or scheduled transmissions.

PSM, as illustrated in Figure 3, allows IoT equipment to wake up at fixed times, transmit data, monitor pages (for the next four idle frames) and go back to sleep. The device and the network negotiate the sleep time to match the application’s requirements. Because the device is dormant during PSM, power consumption is lower and battery life is longer. For example, an LTE-M (Cat M1) device that transmits once per day and otherwise stays in PSM could last well over ten years on two AA batteries.
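
In practice, a device typically requests its sleep time through two timers: the periodic tracking area update timer (T3412 extended), which bounds how long it may sleep, and the active timer (T3324), which sets how long it monitors pages before going dormant. The Python sketch below encodes both in the 8-bit unit-plus-multiplier format of 3GPP TS 24.008 and builds the `AT+CPSMS` command defined in 3GPP TS 27.007. The helper names are hypothetical, the network may grant different values than requested, and individual modules may deviate from the standard syntax, so the module’s AT command manual remains the authority.

```python
# Unit codes (3 most significant bits) paired with their step size in seconds.
# T3412 extended uses the "GPRS Timer 3" coding, T3324 the "GPRS Timer 2" coding.
T3412_UNITS = [(2, "011"), (30, "100"), (60, "101"), (600, "000"),
               (3600, "001"), (36000, "010"), (1152000, "110")]
T3324_UNITS = [(2, "000"), (60, "001"), (360, "010")]


def _encode_timer(seconds, units):
    """Return an 8-bit string: 3 unit bits followed by a 5-bit multiplier."""
    if seconds <= 0:
        raise ValueError("timer must be positive")
    for step, code in units:  # try the finest step first
        if seconds % step == 0 and seconds // step <= 31:
            return code + format(seconds // step, "05b")
    raise ValueError(f"{seconds} s cannot be represented exactly")


def encode_periodic_tau(seconds):
    """Encode the requested periodic TAU (maximum sleep) time."""
    return _encode_timer(seconds, T3412_UNITS)


def encode_active_time(seconds):
    """Encode the requested active (page-monitoring) time."""
    return _encode_timer(seconds, T3324_UNITS)


def build_cpsms_command(tau_seconds, active_seconds):
    """Build an AT+CPSMS request that enables PSM with the two timers."""
    tau = encode_periodic_tau(tau_seconds)
    active = encode_active_time(active_seconds)
    return f'AT+CPSMS=1,,,"{tau}","{active}"'


# Hypothetical meter: wake once per day, listen for pages for 20 s.
print(build_cpsms_command(24 * 3600, 20))
```

Because each timer has only a 5-bit multiplier, not every duration is representable; this sketch rejects such values rather than rounding them silently.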

Because the device remains registered on the network while it sleeps, there is no need to reestablish the connection when it wakes up. Not having to reconnect minimizes signaling overhead, which reduces power consumption.

The circuitry uses a considerable amount of power when waking up, so waking fewer times reduces power consumption. PSM allows the devices to both listen to paging messages and send data during the same session, further optimizing power usage.
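
The effect of waking less often can be approximated with a simple charge budget. The sketch below is a first-order model only: every current, duration and capacity value is a hypothetical placeholder, not a measurement of any module, and it ignores battery self-discharge, temperature and network retransmissions except through a single `usable_fraction` derating factor.

```python
SECONDS_PER_DAY = 86_400
DAYS_PER_YEAR = 365.25


def daily_charge_mah(sleep_current_ua, active_current_ma,
                     active_seconds_per_wake, wakes_per_day):
    """Charge drawn per day (mAh) from sleeping plus periodic wake-ups."""
    active_s = active_seconds_per_wake * wakes_per_day
    if active_s > SECONDS_PER_DAY:
        raise ValueError("active time exceeds one day")
    sleep_s = SECONDS_PER_DAY - active_s
    active_mah = active_current_ma * active_s / 3600
    sleep_mah = (sleep_current_ua / 1000) * sleep_s / 3600
    return active_mah + sleep_mah


def battery_life_years(capacity_mah, daily_mah, usable_fraction=1.0):
    """Years of operation for a given daily charge budget."""
    return capacity_mah * usable_fraction / daily_mah / DAYS_PER_YEAR


# Hypothetical profile: 3 uA asleep, 100 mA average while awake for 10 s.
once_a_day = daily_charge_mah(3, 100, 10, wakes_per_day=1)
once_an_hour = daily_charge_mah(3, 100, 10, wakes_per_day=24)

for label, daily in [("1 wake/day", once_a_day), ("24 wakes/day", once_an_hour)]:
    years = battery_life_years(2500, daily, usable_fraction=0.7)
    print(f"{label}: {daily:.3f} mAh/day, about {years:.1f} years")
```

In this model the awake time dominates the budget, which is why stretching the interval between wake-ups matters more than shaving the sleep current.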

One downside of PSM is that devices are out of the network’s reach while they sleep. It is only suitable for applications that don’t require frequent network-initiated connectivity. Many IoT applications, including some of the most widely deployed, like utility meters, don’t have such needs.

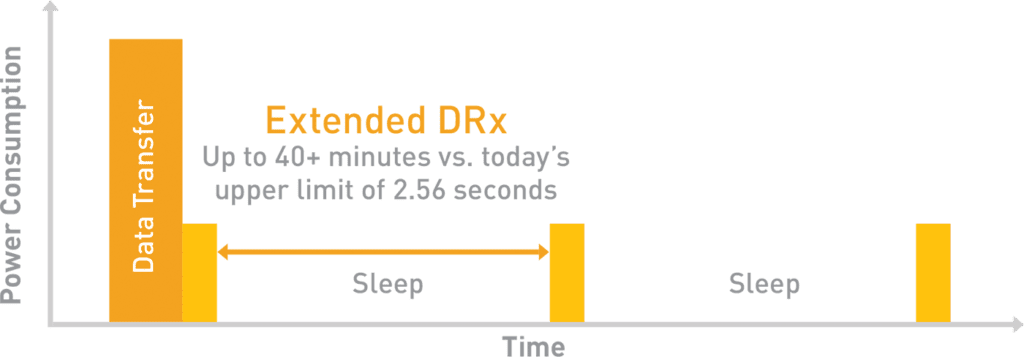

Extended Discontinuous Reception (eDRX)

eDRX is a sleep-cycle-extending feature designed for applications that require network-initiated (mobile-terminated) connections. Like PSM, eDRX allows devices to sleep longer, in this case by extending the interval between paging checks, as shown in Figure 4.

With eDRX, instead of waking up every couple of seconds, devices can sleep longer. They wake up to check whether there is any data for them; if not, they quickly go back to sleep. If there is data, they establish the connection, transfer the data and go back to sleep. The sleep cycle can be as long as 10,485.76 seconds (~175 min) for NB-IoT; LTE-M tops out at 2,621.44 seconds (~44 min). For an LTE-M device that transmits data once per day and wakes up about every ten minutes, a life of 4.7 years should be achievable on two AA batteries. Battery life can be much longer with lengthier sleep cycles. eDRX is useful in many applications, like object tracking or the smart grid, in which devices must be reachable when needed but not immediately. A closed-loop control system, by contrast, would need that immediacy.
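
The odd-looking maximum follows from LTE frame timing: a radio frame lasts 10 ms, a hyperframe is 1,024 radio frames, and the longest idle-mode eDRX cycle spans 1,024 hyperframes. The short Python sketch below reproduces that arithmetic and compares paging wake-ups per day. The 2.56-second regular paging cycle and the 64-hyperframe cycle used as the "about ten minutes" case are illustrative choices, and the function name is hypothetical.

```python
RADIO_FRAME_MS = 10
HYPERFRAME_MS = 1024 * RADIO_FRAME_MS      # 10,240 ms = 10.24 s
MAX_EDRX_MS = 1024 * HYPERFRAME_MS         # 10,485,760 ms = 10,485.76 s
MS_PER_DAY = 86_400_000


def wakeups_per_day(cycle_ms):
    """Number of paging checks per day for a given paging cycle."""
    return MS_PER_DAY / cycle_ms


cycles_ms = {
    "regular DRX, 2.56 s": 2_560,
    "eDRX, 64 hyperframes (~11 min)": 64 * HYPERFRAME_MS,
    "eDRX, 1,024 hyperframes (~175 min)": MAX_EDRX_MS,
}

for label, cycle in cycles_ms.items():
    print(f"{label}: {wakeups_per_day(cycle):,.1f} wake-ups per day")
```

Each skipped wake-up saves the energy of powering up the receive chain, which is where the battery-life gain of longer cycles comes from.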

Design Considerations to Maximize Battery Life

LTE has a variety of solutions for IoT, which might be overwhelming for cellular IoT adopters. Module vendors, to a great extent, simplify this process by providing solutions for specific types of use cases.

The first step for adopters is to fully understand the needs of the use case in terms of:

- Types of data speeds needed (100s of Mbps vs. 1000s of kbps vs. 10s of kbps)

- The amount of data traffic involved (GBs vs. MBs vs. KBs)

- Any latency requirements

- Deployment scenarios or what kind of coverage to expect (e.g., above ground, in basements, urban areas, rural areas)

- What type of battery to use (large custom battery vs. AA, AAA vs. button cells)

- The ability to charge or replace the battery easily (frequent recharge possibility, inability to access the device to charge the batteries, etc.)

- Whether the applications have device-originated or device-terminated data

- Whether the devices need to be available for connections all the time or during scheduled times

Based on use case requirements and network considerations, like capacity, configuration, spectrum, etc., it is possible to determine what type of connection or LTE device category is best suited. The next step is to dimension the device for optimal performance and battery life. It is highly recommended to leverage the expertise of your cellular IoT module vendor considering the multidimensional nature of this review and selection criteria. Top-tier vendors have resources, like application engineering support organizations and offices, in most markets and countries globally.

After selecting the right IoT module, the next question is which of its wide-ranging capabilities to use. Correctly configuring the device is very important. For example, if the module supports both LTE-M and NB-IoT, the device design and engineering teams have to decide which one suits them better. LTE-M is ideal for applications that need slightly higher data rates and bandwidth, full mobility or voice support. NB-IoT is well suited for applications that are delay tolerant, are fixed or semi-fixed and will be operated from deep inside buildings and structures. The device design team must also decide what kinds of sleep cycles work best for them.
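
The decision logic described above can be captured as a first-pass rule of thumb. The Python sketch below is illustrative only: the `UseCase` fields, the function names and the 100 kbps and 1,000 kbps thresholds are assumptions made for this example, not values taken from a standard, and a real selection should also weigh coverage, network availability and cost with the module vendor.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    peak_kbps: float                  # highest data rate the application needs
    needs_voice: bool                 # voice support required
    full_mobility: bool               # device moves between cells while in use
    delay_tolerant: bool              # can tolerate seconds of latency
    network_must_reach_device: bool   # mobile-terminated traffic expected


def suggest_technology(uc):
    """Pick a starting-point LTE technology for the use case."""
    if uc.peak_kbps > 1000:           # beyond the roughly 1 Mbps of LTE-M
        return "higher LTE category"
    if (uc.needs_voice or uc.full_mobility
            or not uc.delay_tolerant or uc.peak_kbps > 100):
        return "LTE-M"
    return "NB-IoT"


def suggest_sleep_feature(uc):
    """eDRX keeps the device periodically reachable; PSM sleeps deepest."""
    return "eDRX" if uc.network_must_reach_device else "PSM"


water_meter = UseCase(peak_kbps=5, needs_voice=False, full_mobility=False,
                      delay_tolerant=True, network_must_reach_device=False)
asset_tracker = UseCase(peak_kbps=50, needs_voice=False, full_mobility=True,
                        delay_tolerant=True, network_must_reach_device=True)

for name, uc in [("water meter", water_meter), ("asset tracker", asset_tracker)]:
    print(name, "->", suggest_technology(uc), "+", suggest_sleep_feature(uc))
```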

Design considerations are as important as the extensive features LTE provides to maximize IoT device battery life.

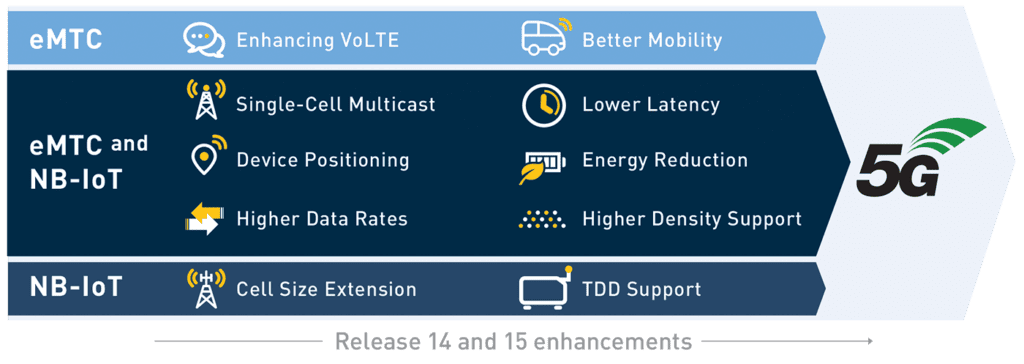

Continued LTE IoT Evolution

Most of the commercial mobile IoT networks today use 3GPP Release (Rel) 13. The evolution continues with Rel 14 and 15, which bring many new features that further improve performance and battery life as well as many other enhancements, as shown in Figure 5.

One key enhancement is higher data rates for both LTE-M and NB-IoT. For example, NB-IoT will support ~120 kbps in the downlink and ~160 kbps in the uplink through Cat NB2, a significant improvement over Cat NB1, which only supported 10s of kbps. These speeds make NB-IoT suitable for more applications and provide finer granularity when choosing between NB-IoT and the roughly 1 Mbps of LTE-M. An example of the usefulness of higher speeds for low-data applications is a firmware over-the-air (FOTA) upgrade. Upgrade files are usually much bigger than regular IoT device data exchanges. So, during upgrades at the low speeds of NB1, the devices would have to stay connected and powered for a very long time, significantly reducing their overall battery life. With higher speeds, they can finish the download sooner and get back to sleep much faster. Likewise, the higher speeds of NB2 are beneficial for applications or use cases in which data traffic is higher than what NB1 can support but still lower than what calls for LTE-M.
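
The FOTA argument comes down to simple arithmetic: airtime is file size divided by data rate, and the charge spent is airtime multiplied by the current drawn while the radio is active. The Python sketch below compares an assumed 20 kbps NB1 downlink with the ~120 kbps NB2 downlink. The 500 kB image size, the 20 kbps figure and the 100 mA active current are hypothetical, and protocol overhead, retransmissions and coverage-enhancement repetitions are ignored.

```python
def download_seconds(file_bytes, rate_kbps):
    """Ideal airtime to move file_bytes at a sustained rate in kbps."""
    return file_bytes * 8 / (rate_kbps * 1000)


def download_charge_mah(file_bytes, rate_kbps, active_current_ma):
    """Charge (mAh) drawn while the radio stays active for the download."""
    return active_current_ma * download_seconds(file_bytes, rate_kbps) / 3600


FIRMWARE_BYTES = 500_000     # hypothetical firmware image size
ACTIVE_CURRENT_MA = 100      # hypothetical average current while receiving

for label, rate_kbps in [("Cat NB1 (assumed 20 kbps)", 20),
                         ("Cat NB2 (~120 kbps)", 120)]:
    seconds = download_seconds(FIRMWARE_BYTES, rate_kbps)
    charge = download_charge_mah(FIRMWARE_BYTES, rate_kbps, ACTIVE_CURRENT_MA)
    print(f"{label}: {seconds:.0f} s on air, {charge:.2f} mAh")
```

Under these assumptions the time on air, and with it the charge spent, scales inversely with the data rate.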

There are many more enhancements, such as coverage extension and TDD support for NB-IoT, and improved voice support (VoLTE) and better mobility for LTE-M. Moreover, many new capabilities are emerging that apply to both LTE-M and NB-IoT, like single-cell broadcasting for efficiently delivering common data to multiple devices in a cell, precise device positioning and others. These enhancements carry the two standards forward until the handoff to full 5G, which is still years away. Designers should leverage these improvements with the understanding that each one comes with power trade-offs they must plan for. For example, power modeling for a Rel 13 device design yields a battery capacity, which translates into weight and volume and therefore into a certain physical design for the device’s enclosure. If there is a plan to upgrade to Rel 14 or 15, it is important to spend time modeling power usage and battery life now, as best as can be done with advance specifications, so that the next-generation device can be made smaller and lighter.
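
Such power modeling can start as an inverse battery-life calculation: given a target lifetime and an estimated daily charge budget, solve for the capacity the enclosure must hold. The Python sketch below compares two daily budgets. Both figures, the 0.7 derating factor and the function name are hypothetical placeholders for values a real power model or measurement campaign would supply.

```python
DAYS_PER_YEAR = 365.25


def required_capacity_mah(target_years, daily_mah, usable_fraction=1.0):
    """Nominal battery capacity needed to reach the target lifetime."""
    if not 0 < usable_fraction <= 1:
        raise ValueError("usable_fraction must be in (0, 1]")
    return target_years * DAYS_PER_YEAR * daily_mah / usable_fraction


# Hypothetical daily budgets for a current and a next-generation design.
budgets_mah_per_day = {"current design": 0.60, "next-generation design": 0.45}

for label, daily in budgets_mah_per_day.items():
    capacity = required_capacity_mah(10, daily, usable_fraction=0.7)
    print(f"{label}: about {capacity:,.0f} mAh for 10 years")
```

Comparing the two results shows how much battery volume a lower daily budget could free up in the enclosure.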

Conclusion

The needs of IoT devices and applications vary widely. 3GPP has devised a range of LTE technologies and device categories to address those diverse requirements. The solutions range from gigabit speeds to 10s of bytes of traffic and offer extended coverage and, most importantly, years of battery life. Device battery life is one of the most critical considerations for the fastest-growing class of cellular IoT applications. LTE has specific features designed especially for LPWAN applications. These include reducing the complexity of devices and substantially extending sleep cycles using PSM, eDRX and other techniques. Future 3GPP releases further improve performance and battery life while also bringing new capabilities.

Properly designing and dimensioning devices to suit specific target use cases is also essential in maximizing the battery life of IoT devices. The global proliferation of LTE IoT is a testament to the versatility and adaptability of the standard for use cases across all industries. The cellular ecosystem continues to show strong growth while all branches of the LTE standard remain completely future-proof, boasting full forward compatibility with 5G.